Creativity and innovation

One of the defining qualities of a good innovative developer is creativity combined with a pragmatic attitude: someone with the 'rough consensus, running code' mentality that pervades good software innovation. This can be seen as the drive to experiment, to turn inspiration and ideas into real, running code, or to pathfind by trying out different things. Innovation often happens when people are talking about quite separate, seemingly unrelated things, to the point that, most of the time, the 'outcomes' of an interaction are impossible to pin down.

Play, vagueness and communication

Creativity, inspiration, innovation, ideas, fun, and curiosity are all useful and important when developing software. These words convey concepts that do not thrive in situations that are purely scheduled, didactic, and teacher-pupil focussed. There needs to be an amount of 'play' in the system (see 'Play'). While this 'play' is bad in a tightly regimented system, it is an essential part of a creative system, allowing new things to develop, new ideas to happen and 'random' interactions to take place.

Alongside this notion of play in an event, there also needs to be an amount of blank space, a vagueness to the event. I think we can agree that much of the usefulness of normal conferences comes from the 'coffee breaks' and 'lunch breaks', which are blank spaces of a sort. What matters is to recognise this and to factor in more of it.

Note that if a single developer could guess at how things should best be developed in the academic space, they would have done so by now.

Pre-compartmentalisation of ideas into 'tracks' can kill potential innovation stone-dead. The distinction between CMS, repository and VLE developers is purely semantic, and it is detrimental for people involved in one space not to overhear the developments, needs, ideas and issues in another. It is especially counter-productive to segregate further by community, such as having simultaneous Fedora, DSpace and EPrints strands at an event.

While the inherent and intended vagueness provides the potential for cross-fertilisation of ideas, and the room for play provides the space, the final ingredient is speech, or any communication that takes place with the same ease and at the same speed as speech. While some may find the 140 character limit on twitter or identi.ca a strange constraint, it gives people a target that makes them really think about what they wish to convey. It also stops the dialogue from becoming a series of monologues, much like the majority of emails on mailing lists, and keeps it as a dialogue between people.

Communication and Developers

One of the dichotomies in the necessity of communication to development is that developers can be shy, initially preferring the false anonymity of textual communication to spoken words between real people. There is a need to provide means for people to break the ice, and to strike up conversations with people that they can recognise as being of like minds. Asking that people's public online avatars are changed to be pictures of them can help people at an event find those that they have been talking to online and to start talking, face to face.

On a personal note, one of the most difficult things I have to do when meeting people out in real life is answer the question 'What do you do?' - it is much easier when I already know that the person asking the question has a technical background.

And again, going back to the concept of compartmentalisation - developers who only deal with developers and their managers/peers will build systems that work best for their peers and their managers. If these people are not the only users then they need to widen their communications. It is important for developers who do not use their own systems to engage with the people who actually do. They should do this directly, without the potential for garbled dialogue via layers of protocol. This part needs managing in whatever space, both to avoid dominance by loud, disgruntled users and to mitigate anti-social behaviour. By and large, I am optimistic about this process: people tend to want to be thanked, and this simple feedback loop can be used to help motivate. Making this feedback more disproportionate (a small 'thank you' can lead to great effects) and adding in the notion of a highscore can lead to all sorts of interaction and outcomes, most notably the rapid reinforcement of any behaviour that led to a positive outcome.

Disproportionate feedback loops and Highscores drive human behaviour

I'll just digress quickly to cover what I mean by a disproportionate feedback loop: it is something that encourages a certain behaviour, where the input is small and inexpensive in either time or effort, but the output can be large and very rewarding. This pattern can be seen in very many interactions: playing the lottery, [good] video game controls, twitter and facebook, musical instruments, the 'who wants to be a millionaire' format, mashups, posting to a blog ('free' comments, auto rss updating, a google-able webpage for each post) etc.

The natural drive for highscores is also worth pointing out. At first glance, is it as simple as considering its use in videogames? How about the concept of getting your '5 fruit and veg a day' (http://www.5aday.nhs.uk/topTips/default.html)? Running in a marathon against other people? Inbox Zero (http://www.slideshare.net/merlinmann/inbox-zero-actionbased-email)? Learning to play different musical scores? Your work being rated highly online? An innovation of yours being commented on by 5 different people in quick succession? Highscores can be very good drivers for human behaviour, and addictive to some personalities.

Why not set up some software highscores? For example, in the world of repositories, how about 'Fastest UI for self-submission' - encouraging automatic metadata/datamining, a monthly prize for 'Most issue tickets handled' - to the satisfaction of those posting the tickets, and so on.
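As a rough illustration of the 'Most issue tickets handled' idea, here is a minimal sketch of how such a highscore might be tallied. The ticket records, the names in them and the `ticket_highscores` function are all hypothetical, invented for this example; a real issue tracker would supply its own data and its own measure of 'satisfaction'.

```python
from collections import Counter


def ticket_highscores(tickets, top_n=3):
    """Rank handlers by tickets closed to the poster's satisfaction.

    `tickets` is a hypothetical list of (handler, poster_satisfied) pairs.
    Tickets whose poster was not satisfied do not count towards the score.
    """
    scores = Counter(handler for handler, satisfied in tickets if satisfied)
    return scores.most_common(top_n)


# Made-up data for one month of tickets.
tickets = [
    ("ana", True),
    ("ben", True),
    ("ana", True),
    ("ben", False),  # closed, but the poster was not happy
    ("cy", True),
]

for handler, score in ticket_highscores(tickets):
    print(f"{handler}: {score}")
```

Counting only tickets that satisfied the poster is one way to keep the score tied to the outcome that matters, rather than to raw volume.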

It is very easy to over-metricise this - some will purposefully abstain from this and some metrics are truly misleading. In the 90s, there was a push to use lines of code added as a metric of productivity. The false assumption is that lines of code have anything to do with productivity - code should be lean, but not too lean to maintain.

So be very careful when adding means to record highscores - they should be flexible, and be fun - if they are no fun for the developers and/or the users, they become a pointless metric, more of an obstacle than a motivation.

The Dev8D event

People were free to roam and interact at the Dev8D event and there was no enforced schedule, but twitter and a loudhailer were used to make people aware of things that were going on. Talks and discussions were lined up prior to the event of course, but the event was organised on a wiki which all were free to edit. As experience has told us, the important and sometimes inspired ideas occur in relaxed and informal surroundings where people just talk and share information, such as in a typical social situation like having food and drink.

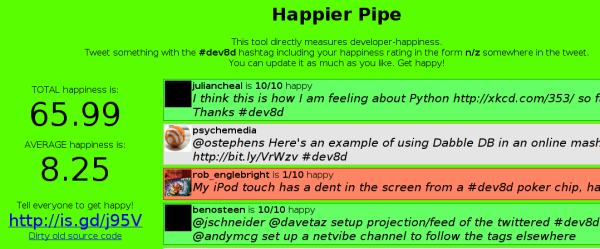

As a specific example, look at the role of twitter at the event. Sam Easterby-Smith (http://twitter.com/samscam) created a means to track 'developer happiness' and shared the tracking 'Happyness-o-meter' site with us all. This unplanned development inspired me to relay the information back to twitter, and similarly led to me running an operating system/hardware survey in a very similar fashion.

To help break the ice and to encourage play, we instituted a number of ideas:

- A wordcloud on each attendee's badge, consisting of whatever we could find of their work online, be it their blog or similar, so that it might provide a talking point or allow people to spot others who write about things they might be interested in learning more about.

- The poker chip game - each attendee was given 5 poker chips at the start of the event, and was encouraged to trade chips for help, advice or as a way to convey a thank you. The goal was that the top 4 people ranked by number of chips at the end of the third day would receive a Dell mini 9 computer. The balance to this was that each chip was also worth a drink at the bar on that day too.

We were well aware that we'd left a lot of play in this particular system, allowing for lotteries to be set up, people pooling their chips, and so on. As the sole purpose of this was to encourage people to interact, to talk and bargain with each other, and to provide that feedback loop I mentioned earlier, it wasn't too important how people got the chips as long as it wasn't underhanded. It was the interaction and the 'fun' that we were after. Just as an aside, Dave Flanders deserves the credit for this particular scheme.
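The mechanics of the chip game are simple enough to sketch in a few lines. This is only an illustration of the rules as described above (5 chips each, chips handed over as thanks, top 4 ranked at the end); the `ChipGame` class and the attendee names are invented here and are not how the event actually tracked anything.

```python
class ChipGame:
    """Toy model of the poker chip game: everyone starts with the same stake."""

    def __init__(self, attendees, starting_chips=5):
        self.chips = {name: starting_chips for name in attendees}

    def give(self, giver, receiver, count=1):
        """Hand over chips, e.g. as a thank you for help or advice."""
        if count <= 0 or self.chips[giver] < count:
            raise ValueError(f"{giver} cannot give {count} chip(s)")
        self.chips[giver] -= count
        self.chips[receiver] += count

    def leaders(self, top_n=4):
        """Return the top_n attendees ranked by chip count, highest first."""
        ranked = sorted(self.chips.items(), key=lambda item: item[1], reverse=True)
        return ranked[:top_n]


# Hypothetical attendees and trades.
game = ChipGame(["ana", "ben", "cy", "dee", "eli"])
game.give("ana", "ben", 2)  # ana thanks ben for some help
game.give("cy", "ben")      # cy does too
print(game.leaders())
```

Note that the total number of chips never changes: a chip only ever moves from one person to another, so every point on the leaderboard corresponds to an interaction between two people.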

Developer Decathlon

The basic concept of the Developer Decathlon was also reusing these ideas of play and feedback: "The Developer Decathlon is a competition at dev8D that enables developers to come together face-to-face to do rapid prototyping of software ideas. [..] We help facilitate this at dev8D by providing both 'real users' and 'expert advice' on how to run these rapid prototyping sprints. [..] The 'Decathlon' part of the competition represents the '10 users' who will be available on the day to present the biggest issues they have with the apps they use and in turn to help answer developer questions as the prototypes applications are being created. The developers will have two days to work with the users in creating their prototype applications."

The best two submissions will get cash prizes that go to the individual, not to the company or institution that they are affiliated with. The outcomes will be made public shortly, once the judging panel has done its work.

Summary

To foster innovation and to allow for creativity in software development:

- Having play space is important

- Being vague with aims and flexible with outcomes is not a bad thing and is vital for unexpected things to develop - e.g. a project's outcomes should be under continual re-negotiation as a general rule, not as the exception.

- Encouraging and enabling free and easy communication is crucial.

- Be aware of what drives people to do what they do. Push all feedback to be as disproportionate as possible, allowing both developers and users to benefit while putting in only a relatively trivial amount of input (this pattern affects web UIs, development cycles, team interaction, etc).

- Choose useful highscores and be prepared to ditch them or change them if they are no longer fun and motivational.